Project 2b: NGordNet (WordNet)

This project was more real-world like, open-ended, since the instructions ask for a specific outcome, but don't tell you how to get there.

WordNetPrerequisites to running the code. Additionally, you need to download the data files.

Instructions: https://fa22.datastructur.es/materials/proj/proj2b/

Solution: https://github.com/tomthestrom/cs61b/tree/master/proj2b

About the project

Great project without too many instructions on how to do it.

Included:

Designing & Implementing a Directed Acyclic Graph with an adjacency list.

Implementing lookups to support the functionality of the Graph.

Using the WordNet Dataset #

Before we can incorporate WordNet into our project, we first need to understand the WordNet dataset.

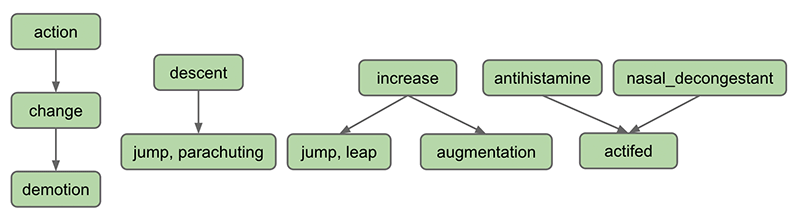

WordNet is a “semantic lexicon for the English language” that is used extensively by computational linguists and cognitive scientists; for example, it was a key component in IBM’s Watson. WordNet groups words into sets of synonyms called synsets and describes semantic relationships between them. One such relationship is the is-a relationship, which connects a hyponym (more specific synset) to a hypernym (more general synset). For example, “change” is a hypernym of “demotion”, since “demotion” is-a (type of) “change”. “ change” is in turn a hyponym of “action”, since “change” is-a (type of) “action”. A visual depiction of some hyponym relationships in English is given below:

Each node in the graph above is a synset. Synsets consist of one or more words in English that all have the same meaning. For example, one synset is “jump, parachuting” , which represents the act of descending to the ground with a parachute. “jump, parachuting” is a hyponym of “descent”, since “jump, parachuting” is-a “descent”.

Words in English may belong to multiple synsets. This is just another way of saying words may have multiple meanings. For example, the word “jump” also belongs to the synset “jump, leap” , which represents the more figurative notion of jumping (e.g. a jump in attendance) rather the literal meaning of jump from the other synset (e.g. a jump over a puddle). The hypernym of the synset “jump, leap” is “increase”, since “jump, leap” is-an “increase”. Of course, there are other ways to “increase” something: for example, we can increase something through “augmentation,” and thus it is no surprise that we have an arrow pointing downwards from “increase” to “augmentation” in the diagram above.

Synsets may include not just words, but also what are known as collocations. You can think of these as single words that occur next to each other so often that they are considered a single word, e.g. nasal_decongestant . To avoid ambiguity, we will represent the constituent words of collocations as being separated with an underscore _ instead of the usual convention in English of separating them with spaces. For simplicity, we will refer to collocations as simply “words” throughout this document.

A synset may be a hyponym of multiple synsets. For example, “actifed” is a hyponym of both “antihistamine” and “ nasal_decongestant”, since “actifed” is both of these things.

If you’re curious, you can browse the Wordnet database by using the web interface , though this is not necessary for this project.

Challenges, Description, Implementation

I decided to divide this section into the most significant challenges I faced.

Challenge 1 - Coming up with a design for the supporting graph

Description

The first challenge was coming up with a design for a Graph to support the requested operations. The data was provided in 2 types of text files:

File type #1: List of noun synsets. The file

synsets.txt(and other smaller files with synset in the name) lists all the synsets in WordNet. The first field is the synset id (an integer), the second field is the synonym set (or synset) , and the third field is its dictionary definition (also called its “gloss” for some reason). For example, the line

means that the synset

{ AND_circuit, AND_gate }has an id number of 36 and its definition is “a circuit in a computer that fires only when all of its inputs fire”. The individual nouns that comprise a synset are separated by spaces (and a synset element is not permitted to contain a space). The S synset ids are numbered 0 through S − 1; the id numbers will appear consecutively in the synset file. You will not (officially) use the definitions in this project, though you’re welcome to use them in some interesting way if you’d like if you decide to add optional features at the end of this project. The id numbers are useful because they also appear in the hyponym files, described as file type #2.

File type #2: List of hyponyms. The file

hyponyms.txt(and other smaller files with hyponym in the name) contains the hyponym relationships: The first field is a synset id; subsequent fields are the id numbers of the synset’s direct hyponyms. For example, the following line

means that the synset 79537 (“viceroy vicereine”) has two hyponyms: 38611 (“exarch”) and 9007 (“Khedive”), representing that exarchs and Khedives are both types of viceroys (or vicereine). The synsets are obtained from the corresponding lines in the file synsets.txt:

There may be more than one line that starts with the same synset ID. For example, in hyponyms16.txt, we have

This indicates that both synsets 12 and 13 are direct hyponyms of synset 11. These two could also have been combined on to one line, i.e. the line below would have the exact same meaning, namely that synsets 12 and 13 are direct hyponyms of synset 11.

You might ask why there are two ways of specifying the same thing. Real world data is often messy, and we have to deal with it.

Implementation

if we "remove" all the "noise", the data provided by the instructors was basically in 2 formats.

The first was a "map" an Integer ID associated with a String word.

The second was already sort of a "graph" since it provided an adjacency list in a form of an Integer ID as a parent/hypernym and an Integer list of 1 or more of it's children/hyponyms. I used an IdToIdsMap<Integer, HashSet<Integer>> within the Graph class to store these relationships.

I proceeded by creating a WordNet class which would act as an intermediary between the only required class in this exam - HyponymHandler - the class that handles queries from the frontend and the Graph class, for which WordNet acts as a wrapper.

In order to support lookup by words provided by the user and to be able to use the results of the lookup (all the ids associated with the word) to query the Graph, I implemented 2 HashMaps that acted as instance properties of the WordNet class:

A

HashMapWordToIdsMap<String, HashSet<Integer>>:Upon lookup returns a

HashSetof ids associated with the requested word, so that we can look them up in theGraph.A

HashMapofIdToWordsMap<Integer, HashSet<String>>:Upon lookup returns a

HashSetof words associated with the requested id, so that we can associate the results returned by querying theGraphwith actual words.

Challenge 2 - Handling List of Words

Description

More verbose description: https://fa22.datastructur.es/materials/proj/proj2b/#handling-lists-of-words

Based on the description of the Graph functionality in Challenge nr 1, it's not hard to understand how retrieving hyponyms for one hypernym works (check WordNet - getHyponyms method for the exact implementation).

The challenge of handling a list of words layed in only returning words that are the hyponyms of all of the provided (the list) hypernyms. If the user types “female, animal” in the words box. Then, clicking “Hyponyms” should display [amazon, bird, cat, chick, dam, demoiselle, female, female_mammal, filly, hag, hen, nanny, nymph, siren], as these are all the words which are hyponyms of female and animal.

Implementation

I implemented this in WordNet - getHyponymsIntersect, where I first call the getHyponyms method for each word on the list, save each result in a TreeSet. These TreeSets are stored in an array which I iterate over and use retainAll to determine which words (hyponyms) appear in all TreeSets.

The result is then returned to the user.

Challenge 3 - Handling k > 0

Description

The methods implemented in the previous challenges returned as many "proper" results as possible. However - what if the user wanted to specify the maximum amount of words they're interested in? In that case, I have to find a way to return the the top k most popular words (possibly in a certain time period).

Implementation

To get the k most popular hyponyms of a hypernym (possibly a list of hypernyms), I implemented the getKPopularHyponyms method in WordNet class.

getKPopularHyponyms

uses a

MaxPQfor sorting the hyponyms by popularity (weight) viaWordNet-getWordWeightPQWordWeightis apublic recordwith 2 props -StringwordandDoubleweightimplements

Comparableinterface -compareTouses theweightproperty for comparison

is the method that is ultimately used to handle all the requests (called in

HyponymHandler.handle), be it 1 or a list of hypernyms, a k = 0 or a k > 0.The data flow is such as:

HyponymHandler.handle => Wordnet.getKPopularHyponyms()

Summary

Those were the most challenging parts of the project, with the most challenging being designing a proper structure to represent the Directed Acyclic Graph that would represent the relationship between the hypernyms and hyponyms.

The only required part of the project was the HyponymHandler class and its handle method. The rest was to be decided by the students.

Extensive unit test were provided to test the HyponymHandler handle method and I also wrote some unit tests for the classes I implemented to verify their correctness.

Tests:

All tests can be found here: https://github.com/tomthestrom/cs61b/tree/master/proj2b/ngordnet/proj2b_testing. Additionally I'm linking the specific unit test I implemented above the code of each class.

Class HyponymHandler

Extensive tests for this class had already provided (see link above).

Class WordNet

Unit test: https://github.com/tomthestrom/cs61b/blob/master/proj2b/ngordnet/proj2b_testing/WordNetTest.java

Class Graph

Unit Test: https://github.com/tomthestrom/cs61b/blob/master/proj2b/ngordnet/proj2b_testing/TestGraph.java

Class IdToIdsMap

No test, class used within Graph whose proper functionality is tested.

Class WordToIdsMap

Class IdToWordsMap

Public record WordWeight

Last updated